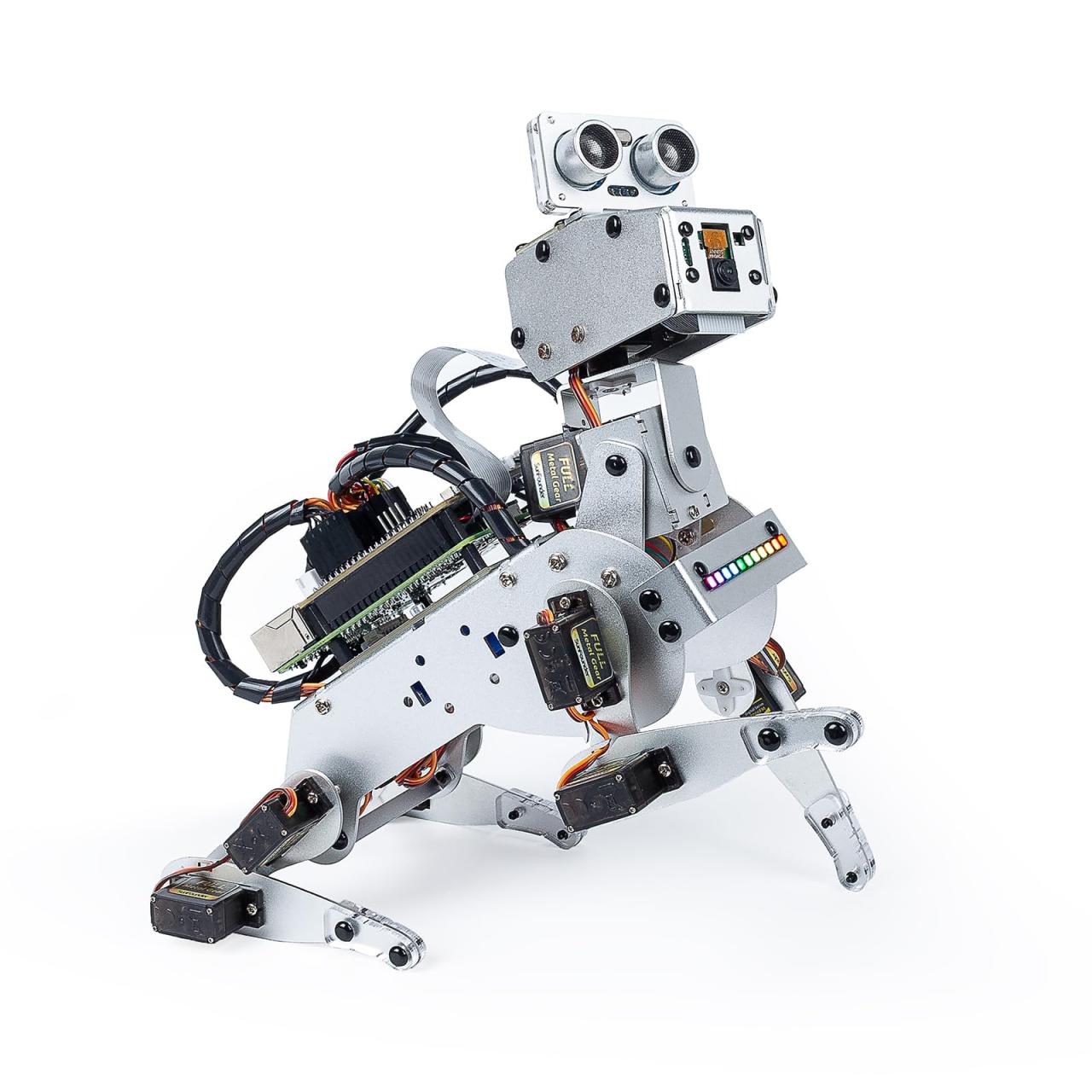

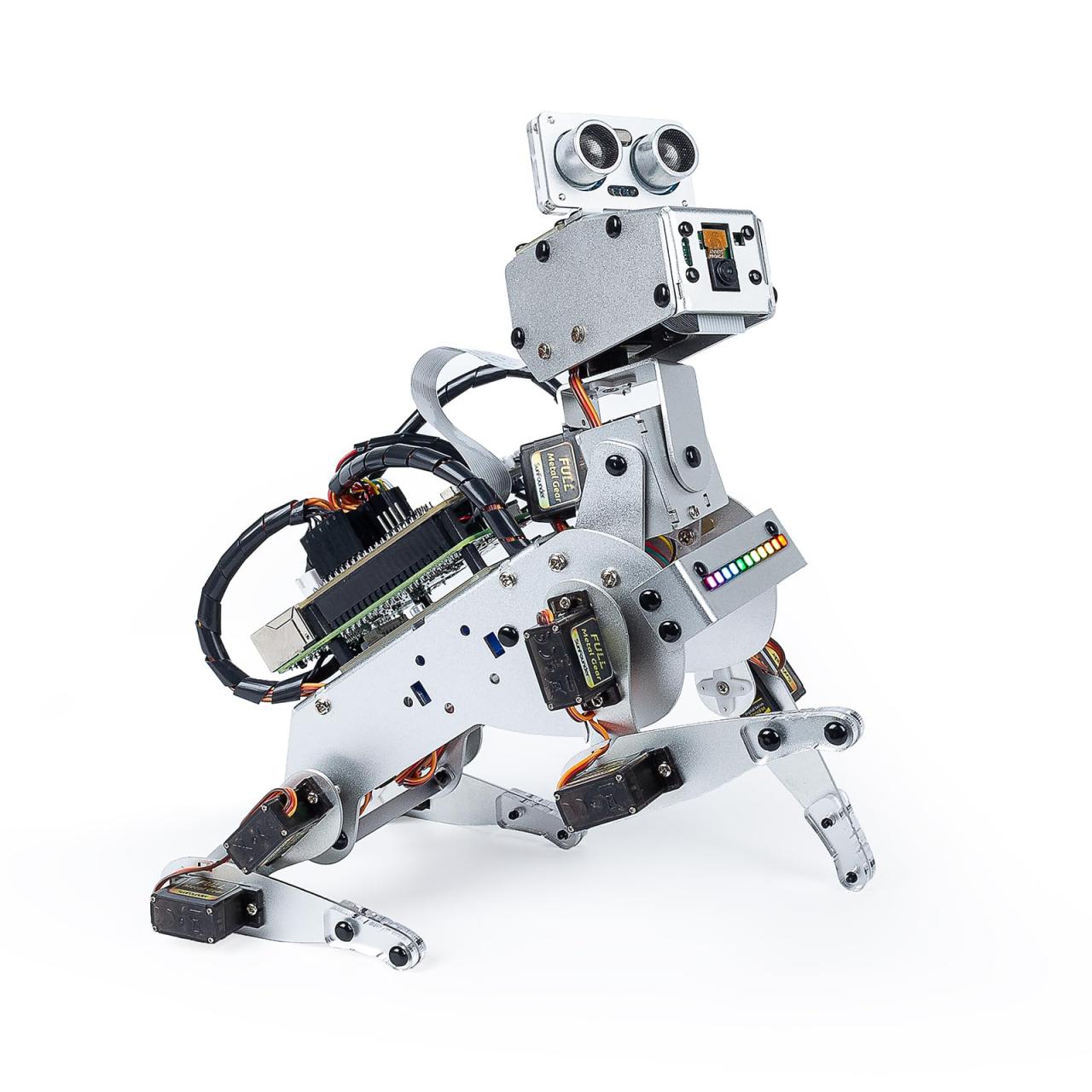

This advanced robotic dog, built on ROS2 and ROS1, combines AI-driven voice commands with visual scene understanding. The PuppyPi Standard Kit, paired with a Raspberry Pi 5, gives users extensive programmability and control, making it a platform for both exploration and interaction.

The robot’s bionic quadruped design is intended to produce fluid, natural movement. This overview covers its technical specifications (processor, memory, and sensors), followed by its key features, programming, and applications.

Product Overview

This robotic dog combines a programmable bionic quadruped body with ROS2 and ROS1 support, a Raspberry Pi 5, and AI capabilities for vision-based scene understanding and voice command response. The PuppyPi Standard Kit, paired with the 8GB Raspberry Pi 5, provides the processing power for these features. The robot is designed for both educational and entertainment purposes.

Its advanced functionalities extend beyond simple movements, allowing for a deeper understanding of robotics and AI principles. The included components provide a comprehensive framework for building, programming, and exploring the intricacies of robotics.

Robot Dog Features

The robotic dog’s design incorporates a robust framework, enabling a range of movements and interactions. Key features include real-time scene understanding through integrated vision systems, enabling adaptive responses to environmental stimuli. Voice commands provide an intuitive interface for controlling the robot’s actions, facilitating interaction and play. The platform’s open-source nature ensures a flexible and customizable experience, encouraging exploration and experimentation.

PuppyPi Standard Kit Components

The PuppyPi Standard Kit, combined with the Raspberry Pi 5 8GB, provides the essential hardware and software components for assembling and operating the robot dog. The package includes a complete set of actuators, sensors, and electronic components, facilitating rapid assembly and integration. It is pre-configured with necessary software for easier initial setup.

Programmable Bionic Quadruped Design

The bionic quadruped design imitates the natural gait and movement of a dog, allowing intricate, realistic motion and a wider range of interactions and responses. Its modular design also makes it easy to customize the robot and extend its capabilities as more advanced features are added.
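A canine-like gait can be described by the phase offset of each leg within a repeating step cycle. The sketch below shows a trot, in which diagonal leg pairs move together. The leg names, the 50% duty factor, the default period, and the functions `leg_state` and `gait_snapshot` are illustrative assumptions, not PuppyPi’s actual gait code.

```python
# Hypothetical trot-gait scheduler: diagonal legs share the same phase offset.
# Leg names, offsets, period and duty factor are illustrative assumptions.
TROT_OFFSETS = {
    "front_left": 0.0,
    "rear_right": 0.0,   # diagonal partner of front_left
    "front_right": 0.5,
    "rear_left": 0.5,    # diagonal partner of front_right
}


def leg_state(t: float, period: float, leg: str, duty: float = 0.5) -> str:
    """Return 'stance' (foot down) or 'swing' (foot lifted) for one leg at time t."""
    # Fraction of the way through this leg's own cycle, in [0, 1)
    phase = (t / period + TROT_OFFSETS[leg]) % 1.0
    # The foot stays on the ground for the first `duty` fraction of its cycle
    return "stance" if phase < duty else "swing"


def gait_snapshot(t: float, period: float = 0.6) -> dict:
    """States of all four legs at time t (seconds)."""
    return {leg: leg_state(t, period, leg) for leg in TROT_OFFSETS}
```

With a 50% duty factor and a half-cycle offset between the diagonal pairs, exactly two legs support the body at any moment, which is what distinguishes a trot from a walk or a bound.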

Key Specifications

| Specification | Details |
|---|---|
| Controller | Raspberry Pi 5 (quad-core Arm Cortex-A76) |
| Memory | 8GB RAM |
| Sensors | Vision sensors, IMU, touch sensors, ultrasonic sensors, etc. |
| Software Framework | ROS2, ROS1 |
| Wireless Connectivity | Wi-Fi, Bluetooth |
| Operating System | Linux-based, running ROS |

Technical Specifications

This section covers the technical underpinnings of the robotic dog: its operating system, processing power, AI model, sensor suite, and control architecture, followed by a comparison with similar models. Understanding these details provides insight into the robot’s capabilities and potential applications. The combination of a capable processor, an AI model, and a multi-sensor array lets the robot interact with its environment in complex ways.

Operating System and Processing Power

The robotic dog supports both ROS1 and ROS2, which are middleware frameworks running on Linux rather than operating systems in their own right. Supporting both keeps the robot compatible with existing ROS1 libraries while providing the more modern, scalable features of ROS2. The 8GB Raspberry Pi 5 handles the computational load of the software stack, sensor data processing, and AI inference, and this configuration is intended to be sufficient for real-time, responsive operation.

AI Model

The robotic dog integrates a pre-trained Kami AI model for natural language processing and scene understanding. This enables the robot to interpret voice commands and react accordingly. This model’s adaptability allows for a wide range of commands and interactions.

Sensor Suite

The robotic dog carries a diverse array of sensors for perception and action, including but not limited to:

- Camera System: A high-resolution camera system provides visual input for scene understanding, object detection, and navigation. This allows the robot to identify and track objects in its surroundings.

- Inertial Measurement Unit (IMU): The IMU measures acceleration, angular velocity, and orientation. This is crucial for maintaining balance and accurate movement.

- Ultrasonic Sensors: Ultrasonic sensors provide range data for obstacle avoidance and proximity detection. This ensures the robot can safely navigate its environment.

- Force Sensors: Embedded force sensors in the legs facilitate precise control and allow the robot to adapt to different terrains.

- Microphone Array: A microphone array provides high-fidelity audio input for accurate voice command recognition and environmental sound analysis. This enables the robot to understand and respond to voice commands effectively.
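One common way to fuse IMU readings for balance is a complementary filter: integrate the gyroscope for short-term accuracy and blend in the accelerometer’s gravity direction to cancel long-term drift. The sketch below is a generic example, not taken from the PuppyPi software. The axis convention (x forward, z up, pitch positive when the front end rises), the blend factor `alpha`, and the function names are assumptions.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (radians) implied by the measured gravity vector alone.

    Assumes x points forward and z points up when the robot is level,
    with pitch positive when the front end is raised.
    """
    return math.atan2(ax, math.sqrt(ay * ay + az * az))


def complementary_pitch(prev_pitch: float, pitch_rate: float,
                        accel: tuple, dt: float, alpha: float = 0.98) -> float:
    """Blend integrated gyro pitch rate (rad/s) with the accelerometer estimate."""
    gyro_estimate = prev_pitch + pitch_rate * dt   # smooth, but drifts over time
    accel_estimate = accel_pitch(*accel)           # noisy, but drift-free
    return alpha * gyro_estimate + (1.0 - alpha) * accel_estimate
```

A value of `alpha` close to 1 trusts the gyroscope over short intervals while still letting the accelerometer pull the estimate back toward the true tilt, so an initial error decays geometrically with each update.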

Control System Architecture

The control system architecture of the robotic dog is built around the ROS framework. The software stack manages communication between the different components, ensuring smooth and coordinated operation.

- ROS2/ROS1 Integration: Supporting both ROS1 and ROS2 eases migration between the two and keeps the robot compatible with a wide range of ROS tools and libraries.

- Modular Design: The modular design allows for easy customization and expansion of functionalities. This enables future development and integration of new features.

Comparative Analysis

| Feature | PuppyPi Standard Kit | Other Similar Robotic Dogs |
|---|---|---|
| Software Framework | ROS2/ROS1 | ROS2 or a custom OS/framework |
| Processing Power | Raspberry Pi 5 8GB | Raspberry Pi 4, NVIDIA Jetson Nano |
| AI Model | Kami | Various pre-trained models |
| Sensor Suite | Camera, IMU, Ultrasonic, Force, Microphone | Varied, may include LIDAR, GPS |
| Control Architecture | ROS-based | Various custom architectures |

This table provides a simplified comparison, highlighting key differences and similarities between the PuppyPi Standard Kit and other commercially available robotic dogs. Specific capabilities and functionalities may vary depending on the particular model.

Programming and Customization

This section details the tools and techniques for customizing the robot’s behavior. The PuppyPi, with its ROS2 and ROS1 framework, provides a robust platform for developers to tailor the robot’s actions. From basic movements to complex interactions, the programmable nature empowers users to adapt the robot to their specific needs.

Programming Environment and Tools

The programming environment is centered around the ROS2 and ROS1 ecosystems. This allows for a modular and scalable approach to robot control. Essential tools include a text editor (e.g., VS Code), a terminal for command-line interactions, and a graphical user interface (GUI) for visual debugging and monitoring. The ROS2 and ROS1 packages offer a comprehensive set of libraries and tools for handling tasks such as navigation, sensor data processing, and communication.

APIs and Libraries

The robot utilizes a range of APIs and libraries, drawing on the power of the ROS ecosystem. These tools facilitate interactions with various components, including the robot’s motors, sensors, and communication interfaces. Key APIs include those for controlling the robot’s joints, processing sensor data, and handling voice commands. The use of these APIs simplifies the programming process by abstracting away the low-level details of the robot’s hardware.

The libraries are well-documented, enabling developers to quickly learn and integrate new functionalities.

Example Custom Program: Robot Movement

A basic program for controlling the robot’s movements sets target positions for its joints. Using ROS2 or ROS1, a user can define specific angles for each leg and command the robot to move accordingly, which allows precise control ranging from simple forward and backward steps to complex maneuvers. Alternatively, a Python script can publish body-velocity commands through the ROS APIs and leave joint-level control to the robot’s gait controller, assuming a node on the robot translates those commands into leg motion.

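Example (conceptual Python, ROS2 `rclpy`):

```python
import rclpy
from rclpy.node import Node
from geometry_msgs.msg import Twist


class RobotMovement(Node):
    def __init__(self):
        super().__init__('robot_movement_node')
        # Publisher for velocity commands on 'cmd_vel' (queue depth 10);
        # assumes a node on the robot subscribes to this topic
        self.cmd_vel_publisher = self.create_publisher(Twist, 'cmd_vel', 10)
        # Timer calls move_robot every 0.1 s (10 Hz)
        self.timer = self.create_timer(0.1, self.move_robot)

    def move_robot(self):
        msg = Twist()
        msg.linear.x = 0.5  # forward velocity; ROS convention is m/s
        self.cmd_vel_publisher.publish(msg)


def main():
    rclpy.init()              # must be called before creating any node
    node = RobotMovement()
    try:
        rclpy.spin(node)      # process timer callbacks until interrupted
    finally:
        node.destroy_node()
        if rclpy.ok():
            rclpy.shutdown()


if __name__ == '__main__':
    main()
```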
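The text above also describes commanding target angles for each leg. If each leg is modeled as a planar two-link chain (thigh and shin), the hip and knee angles for a desired foot position follow from the law of cosines. The sketch below assumes hypothetical 5 cm link lengths, hypothetical function names `leg_ik` and `leg_fk`, and an angle convention of our own choosing; PuppyPi’s actual leg geometry and servo interface may differ.

```python
import math

# Hypothetical link lengths in metres; measure the real leg before use.
THIGH = 0.05
SHIN = 0.05


def leg_ik(x: float, z: float, l1: float = THIGH, l2: float = SHIN):
    """Return (hip, knee) angles in radians for a foot target (x, z).

    Assumed conventions: the hip is at the origin, z is negative below the
    hip, hip angle is measured from straight down (positive = foot forward),
    and knee = 0 means a fully straight leg.
    """
    d2 = x * x + z * z
    # Law of cosines: d^2 = l1^2 + l2^2 + 2*l1*l2*cos(knee)
    cos_knee = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if cos_knee > 1.0 + 1e-9 or cos_knee < -1.0 - 1e-9:
        raise ValueError("foot target is out of reach")
    cos_knee = max(-1.0, min(1.0, cos_knee))  # absorb rounding at full extension
    knee = math.acos(cos_knee)
    foot_dir = math.atan2(x, -z)  # angle of the hip-to-foot line from vertical
    hip = foot_dir + math.atan2(l2 * math.sin(knee), l1 + l2 * math.cos(knee))
    return hip, knee


def leg_fk(hip: float, knee: float, l1: float = THIGH, l2: float = SHIN):
    """Forward kinematics with the same conventions, used to check leg_ik."""
    x = l1 * math.sin(hip) + l2 * math.sin(hip - knee)
    z = -l1 * math.cos(hip) - l2 * math.cos(hip - knee)
    return x, z
```

Running the inverse solution back through the forward kinematics is a cheap way to validate the sign conventions before sending any angles to real servos.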

Simple Programming Task Steps

The table below outlines the steps in two basic programming tasks: voice recognition and object detection.

| Task | Step 1 | Step 2 | Step 3 |
|---|---|---|---|
| Voice Recognition | Initiate voice recognition service. | Process audio input from microphone. | Match audio to commands (e.g., “move forward”). |
| Object Detection | Start camera feed and image processing. | Use pre-trained object detection models (e.g., YOLO). | Identify and locate objects in the scene. |
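Locating objects usually involves post-processing the raw detector output. Detectors in the YOLO family typically emit several overlapping boxes per object, which are pruned with non-maximum suppression (NMS). The sketch below is a generic NMS in plain Python; the `(x1, y1, x2, y2)` box format and the 0.5 IoU threshold are assumptions, and a real pipeline would normally use the NMS built into its detection library.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes kept, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

For example, two heavily overlapping boxes around the same ball collapse to the higher-scoring one, while a box around a separate object elsewhere in the frame survives.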

Voice Functionality

This robotic dog supports voice commands, allowing users to control it with spoken instructions. This provides a more natural and intuitive method of control and opens up a wider range of applications and interactions with the robot. The robot uses a voice recognition engine to convert spoken words into actionable commands.

This engine is designed to be robust to variations in accent, speech patterns, and background noise, making voice interaction more reliable and user-friendly.

Voice Recognition Engine

The voice recognition engine employed is a state-of-the-art speech-to-text algorithm. This algorithm leverages machine learning models trained on extensive datasets of spoken language, allowing it to accurately transcribe and interpret a wide range of voice commands. The system’s accuracy is further enhanced by noise cancellation and speaker adaptation techniques.

Supported Voice Commands and Actions

The robot supports a range of voice commands designed for various functionalities. These commands are categorized for easier understanding and use. Users can interact with the robot in a natural, conversational way, making the experience more intuitive and enjoyable.

Voice Commands, Actions, and Expected Responses

| Voice Command | Action | Expected Response |
|---|---|---|
| “Walk forward” | Robot moves forward | Robot begins walking forward |
| “Turn left” | Robot turns left | Robot rotates left |
| “Turn right” | Robot turns right | Robot rotates right |
| “Stop” | Robot stops all movement | Robot halts movement |
| “Fetch ball” | Robot navigates to and picks up a designated ball | Robot moves towards the ball, picks it up, and returns. |
| “Play music” | Robot plays music from a pre-set playlist | Robot starts playing music |
| “Show me the time” | Robot displays the current time | Robot displays the time on its screen/LEDs |
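Once a speech-to-text engine has produced a transcript, it still has to be matched to one of the commands in the table. The sketch below uses fuzzy string matching from Python’s standard `difflib` module so that near-misses such as “turn lef” still resolve. The command set mirrors the movement rows of the table; the velocity values, the fallback-to-stop policy, and the helpers `match_command` and `velocity_for` are hypothetical.

```python
import difflib

# Hypothetical mapping from spoken phrase to (linear_x, angular_z) velocities.
# Only the movement commands from the table are included here.
COMMANDS = {
    "walk forward": (0.5, 0.0),
    "turn left": (0.0, 0.8),    # positive angular_z = counter-clockwise (left)
    "turn right": (0.0, -0.8),
    "stop": (0.0, 0.0),
}


def match_command(transcript: str, cutoff: float = 0.6):
    """Return the closest known command, or None if nothing is close enough."""
    text = transcript.lower().strip()
    matches = difflib.get_close_matches(text, list(COMMANDS), n=1, cutoff=cutoff)
    return matches[0] if matches else None


def velocity_for(transcript: str):
    """Map a transcript to a velocity pair; unrecognised phrases stop the robot."""
    command = match_command(transcript)
    return COMMANDS[command] if command else COMMANDS["stop"]
```

Falling back to “stop” for unrecognised speech is a conservative design choice: a misheard phrase halts the robot instead of triggering an unintended motion.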

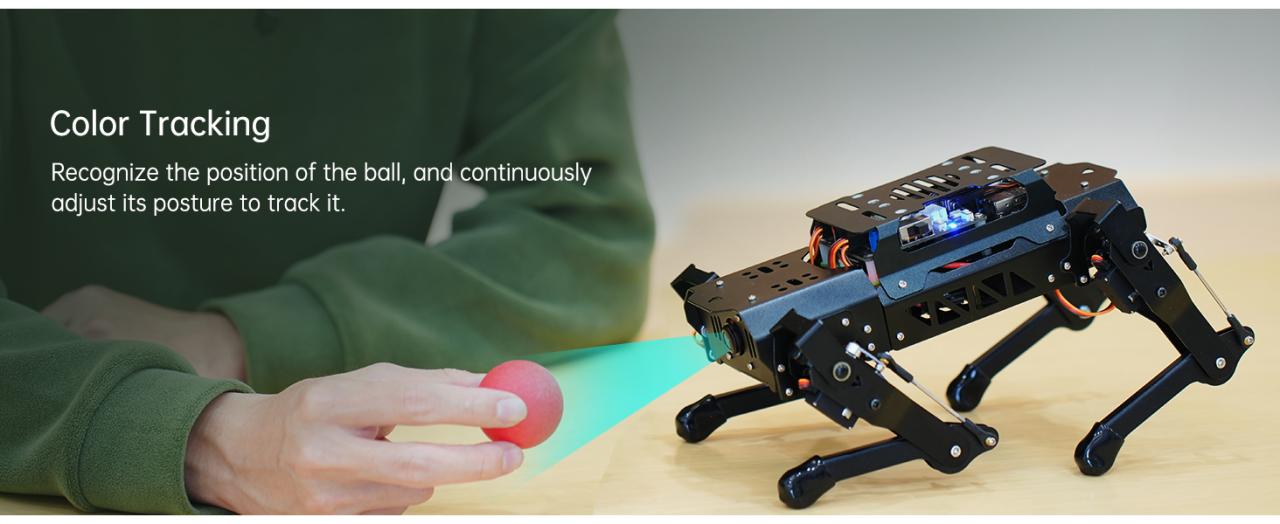

Vision System and Scene Understanding

The PuppyPi robotic dog’s vision system plays a crucial role in its interaction with the environment. It lets the robot perceive and interpret visual information, enabling navigation, object recognition, and adaptive behavior, with applications that include obstacle avoidance, object tracking, and rudimentary scene understanding. At its core are computer vision algorithms that process images from the robot’s camera to extract meaningful information about the surrounding scene.

This processing encompasses several steps, from image acquisition to object recognition, allowing the robot to understand the world around it. The quality of the vision system directly impacts the robot’s overall performance and capabilities.

Computer Vision Algorithms

The PuppyPi employs a suite of computer vision algorithms to analyze images captured by its onboard camera. These algorithms are specifically designed for real-time processing, allowing the robot to respond quickly to changes in its environment. The algorithms include image processing, object detection, and object recognition techniques, each designed to achieve a specific task.

Image Processing Steps for Object Detection, Tracking, and Recognition

A multi-stage approach is used for object detection, tracking, and recognition. Preprocessing first enhances image quality through noise reduction, contrast adjustment, and color conversion. Feature extraction then isolates the relevant characteristics of objects in the image, and object detection uses these features to identify potential objects. Finally, object tracking maintains a record of the identified objects across successive frames, and object recognition categorizes and identifies the detected objects.

Object recognition uses Convolutional Neural Networks (CNNs) to classify objects with high accuracy.
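As a concrete instance of the noise-reduction step, a median filter replaces each pixel with the median of its neighbourhood, which removes isolated “salt-and-pepper” specks while keeping edges reasonably sharp. The sketch below works on a small grayscale image stored as a list of lists, so it needs no external libraries; it is an illustration rather than PuppyPi’s pipeline, and in practice a library routine such as OpenCV’s `cv2.medianBlur` would normally be used instead.

```python
from statistics import median


def median_filter_3x3(image):
    """Apply a 3x3 median filter to a grayscale image (list of equal-length rows).

    Border pixels use only the neighbours that exist inside the image.
    """
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Collect the pixel and its in-bounds neighbours
            window = [
                image[yy][xx]
                for yy in range(max(0, y - 1), min(h, y + 2))
                for xx in range(max(0, x - 1), min(w, x + 2))
            ]
            out[y][x] = median(window)
    return out
```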

- Image Preprocessing: This stage prepares the raw image data for further processing. Techniques such as noise reduction, edge detection, and color correction enhance image clarity, crucial for subsequent analysis. For example, noise reduction filters out extraneous visual information, like camera artifacts, to produce cleaner images.

- Feature Extraction: This step identifies distinctive characteristics of objects. These features can be edges, corners, textures, or other patterns, depending on the specific object being tracked. For instance, recognizing a ball might involve extracting its circular shape and color.

- Object Detection: This stage locates potential objects within the image based on the extracted features. Techniques such as template matching or trained detectors (e.g., YOLO) identify the presence and location of objects. A common example is detecting a person standing in front of the robot using a trained object detector.

- Object Tracking: This is a crucial aspect of understanding the dynamic environment. The system tracks objects across multiple frames to maintain their identification and location. For example, tracking a moving ball requires maintaining its identity through several consecutive images.

- Object Recognition: This final step classifies and identifies the detected objects. Advanced algorithms like Convolutional Neural Networks (CNNs) are often employed to achieve high accuracy in object recognition. For example, recognizing a specific type of toy would involve categorizing it based on its visual characteristics.
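The tracking step can be illustrated with a simple nearest-centroid tracker: each new detection is assigned to the closest existing track within a distance limit, and unmatched detections start new tracks. This greedy matching is a deliberate simplification; production trackers such as SORT add motion prediction and optimal assignment. The class name, the `max_distance` parameter, and the policy of dropping unseen tracks immediately are hypothetical choices for this sketch.

```python
import math


class CentroidTracker:
    """Greedy nearest-neighbour tracker that keeps object IDs across frames."""

    def __init__(self, max_distance: float = 50.0):
        self.max_distance = max_distance   # pixels; larger jumps start a new track
        self.next_id = 0
        self.tracks = {}                   # track id -> last (x, y) centroid

    def update(self, centroids):
        """Match this frame's centroids to tracks; return {id: (x, y)}."""
        unmatched = dict(self.tracks)
        result = {}
        for c in centroids:
            best_id, best_dist = None, self.max_distance
            for tid, prev in unmatched.items():
                d = math.dist(c, prev)
                if d <= best_dist:
                    best_id, best_dist = tid, d
            if best_id is None:
                best_id = self.next_id     # no close track: start a new one
                self.next_id += 1
            else:
                del unmatched[best_id]     # each track matches at most once
            result[best_id] = c
        self.tracks = result               # tracks not seen this frame are dropped
        return result
```

A moving ball keeps its ID as long as it moves less than `max_distance` pixels between frames; a real tracker would usually also keep a track alive for a few frames after a missed detection.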

Examples of Robot Perception and Reaction

The PuppyPi can perceive and react to its environment in various ways. For instance, it can detect and avoid obstacles by recognizing shapes and patterns. It can also track moving objects, such as a toy or a person, using sophisticated object tracking algorithms. Furthermore, the system allows the robot to react appropriately to specific commands and instructions.
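Reacting to a tracked object often reduces to a small feedback loop. The sketch below turns the robot toward a target by making the yaw rate proportional to how far the object’s centroid lies from the image centre. The gain, the rate limit, and the function name are assumptions for illustration; the sign follows the ROS convention that a positive `angular.z` turns left.

```python
def yaw_rate_toward(cx: float, image_width: int,
                    gain: float = 1.0, max_rate: float = 1.0) -> float:
    """Proportional yaw command (rad/s) that centres an object at pixel column cx."""
    half = image_width / 2.0
    # Normalised horizontal error in [-1, 1]; positive when the object is right of centre
    error = (cx - half) / half
    # Object on the right -> negative yaw (turn right), per ROS convention
    rate = -gain * error
    # Clamp so a large error cannot command an unsafe turn rate
    return max(-max_rate, min(max_rate, rate))
```

The returned value could be placed in the `angular.z` field of a velocity command; a purely proportional controller is the simplest choice and may oscillate if the gain is set too high.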

Applications and Use Cases

This robotic dog offers a versatile platform with a wide array of potential applications beyond simple entertainment. Its capabilities in vision, voice recognition, and programming make it a compelling tool for education, research, and everyday tasks. This section explores how the robotic dog can be deployed, from interactive learning environments to research platforms, and how it might change the way we approach tasks in the home and beyond.

Specific examples illustrate the robot’s practical applications.

Educational Applications

This robotic dog can be a powerful tool in educational settings. Its interactive nature makes it an engaging platform for teaching programming concepts, robotics principles, and even basic AI. Students can learn by interacting with the robot, observing its actions, and modifying its behavior through programming. Visual programming interfaces allow learners to create simple routines and complex algorithms, fostering a deeper understanding of how robots function.

Research Applications

The robot’s capabilities extend to research settings. Its advanced sensors and processing capabilities make it a valuable tool for studying animal behavior, human-robot interaction, and environmental monitoring. Researchers can use the robotic dog to collect data in real-time, analyze its behavior in specific situations, and gain insights into various fields. For example, it could be used to monitor animal habitats in remote locations or assist with tasks in hazardous environments.

Furthermore, the robot’s ability to navigate and interact with its environment provides a valuable research platform.

Entertainment Applications

Beyond educational and research contexts, the robotic dog can offer engaging entertainment experiences. Its interactive features and customizable behaviors can be programmed to provide a variety of entertaining experiences. These interactions can stimulate learning and promote creativity, creating a platform for diverse and personalized entertainment. The robot’s ability to respond to voice commands and perform tricks can make it a fun companion for all ages.

Home Automation and Security

The robotic dog can be incorporated into home automation systems to automate various tasks and enhance security. For example, it can be programmed to perform tasks like opening doors, turning lights on/off, and monitoring the environment. In a security context, it could patrol the home, detecting unusual activity and alerting the owner. Its voice recognition capabilities can further enhance its security functions, enabling voice-activated control.

Specific Tasks

The robotic dog can perform a variety of tasks depending on its programming. Examples include:

- Obstacle avoidance: The robot can navigate complex environments while avoiding obstacles. This is a crucial skill for various applications, including home automation, security, and research.

- Object recognition: The robot can recognize and identify different objects in its environment, which is useful for tasks such as inventory management, sorting, and environmental monitoring.

- Voice command execution: The robot can execute tasks based on voice commands, enabling hands-free operation and seamless interaction with the user.

- Environmental monitoring: With additional sensors, the robot could collect environmental data such as temperature, humidity, and air quality for research and monitoring purposes.
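Obstacle avoidance with an ultrasonic sensor rests on time of flight: the sensor measures how long an echo takes to return, and the distance is half the round trip multiplied by the speed of sound (about 343 m/s in air at 20 °C). The sketch below converts echo times to distances and picks a simple reaction. The thresholds and action names are hypothetical and would need tuning on the real robot.

```python
SPEED_OF_SOUND = 343.0  # m/s in dry air at about 20 °C


def echo_to_distance(echo_time_s: float) -> float:
    """Distance in metres from a round-trip echo time in seconds."""
    return echo_time_s * SPEED_OF_SOUND / 2.0   # halve: sound travels there and back


def avoidance_action(distance_m: float, stop_at: float = 0.15,
                     slow_at: float = 0.40) -> str:
    """Hypothetical policy: turn away when close, slow down when near."""
    if distance_m < stop_at:
        return "turn"
    if distance_m < slow_at:
        return "slow"
    return "forward"
```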

The wide range of potential applications and tasks makes this robotic dog a versatile tool for various sectors, including education, research, and entertainment. Its adaptability and programmability offer immense potential for innovation and development.

Hardware and Software Setup

This section details the crucial steps for setting up the robotic dog’s hardware and software environment. A robust setup ensures the robot functions as intended, enabling interaction with the various features. Successful configuration allows for seamless integration of the Raspberry Pi 5, sensors, and the ROS2 framework.

Hardware Setup

The hardware setup involves connecting the various components and ensuring proper functionality. The process begins with physically mounting the sensors to the robot’s chassis, ensuring correct alignment and secure connections. Thorough testing of sensor readings is essential before proceeding to the software setup.

- Raspberry Pi 5 Connection: The Raspberry Pi 5 serves as the central processing unit. Connect it to the power supply and any necessary peripherals; the Raspberry Pi 5 has built-in Wi-Fi, so no separate wireless module is required. Confirm a stable power supply and network connectivity before proceeding to the next step.

- Sensor Integration: Attach the sensors (e.g., cameras, IMUs, ultrasonic sensors) according to the provided schematics. Secure the connections and ensure all sensor cables are properly routed and protected. This ensures accurate data transmission from the sensors.

- Power Management: Carefully consider the power requirements for each component. Ensure adequate power delivery to all sensors and the Raspberry Pi. Over- or under-voltage can cause sensor failure or damage the Raspberry Pi. Use a stable and reliable power supply.
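One way to carry out the sensor-reading test for the IMU is to sample it while the robot is stationary: whatever its orientation, the accelerometer should then report a magnitude close to standard gravity (9.80665 m/s²), and the gyroscope rates should be close to zero. The check below encodes that idea. The tolerance values, the function name, and the shape of the sample data are assumptions, and reading the samples from the actual IMU driver is left out.

```python
import math

GRAVITY = 9.80665  # standard gravity, m/s^2


def imu_rest_check(accel_samples, gyro_samples,
                   accel_tol: float = 0.5, gyro_tol: float = 0.05):
    """Return a list of problems found in IMU samples taken at rest.

    accel_samples: iterable of (ax, ay, az) in m/s^2
    gyro_samples:  iterable of (gx, gy, gz) in rad/s
    """
    problems = []
    for i, (ax, ay, az) in enumerate(accel_samples):
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        if abs(magnitude - GRAVITY) > accel_tol:
            problems.append(f"accel sample {i}: |a| = {magnitude:.2f} m/s^2")
    for i, rates in enumerate(gyro_samples):
        if max(abs(r) for r in rates) > gyro_tol:
            problems.append(f"gyro sample {i}: rates {rates} not near zero")
    return problems
```

An empty list suggests the IMU is wired and scaled correctly; a magnitude near half of gravity, for instance, would point to a wrong range or scale setting in the driver configuration.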

Software Installation

The software installation process involves setting up the ROS2 environment and installing necessary libraries. This crucial step allows the robot to interpret sensor data and execute commands. Careful attention to detail is required during this process.

- ROS2 Installation: Install the ROS2 distribution compatible with the Raspberry Pi 5. Follow the official ROS2 installation guide for your specific distribution. This includes setting up the necessary packages and dependencies for the robot.

- Library Installation: Install the required libraries for the specific sensors used. These libraries translate sensor readings into usable data for the ROS2 system. Verify that the installed libraries are compatible with the ROS2 version.

- Configuration: Configure the ROS2 environment, including setting up launch files and parameters for the robot’s sensors. Proper configuration is critical for the robot to function as intended. Carefully examine and adjust the configuration files for optimum performance.

Software Dependencies

The software relies on specific dependencies for its functionality. These dependencies are essential for the robot’s operation.

- ROS2: The robot’s core software relies on the Robot Operating System 2 (ROS2) framework. This provides the necessary tools for communication and control.

- Sensor Drivers: The specific drivers for the robot’s sensors (e.g., camera drivers, IMU drivers) are crucial for accurate data acquisition and interpretation.

- Additional Libraries: Other libraries, such as image processing libraries or machine learning libraries, may be required depending on the robot’s functionalities.

Hardware Components and Setup Steps

| Hardware Component | Setup Steps |
|---|---|
| Raspberry Pi 5 | Connect to power, Wi-Fi, and peripherals. Verify stable power and connectivity. |
| Sensors (Cameras, IMUs, etc.) | Attach to the robot chassis according to the schematics. Secure connections. Test sensor readings. |
| Power Supply | Ensure sufficient power delivery to all components. Use a stable and reliable power supply. |

Potential Limitations and Improvements

This robotic dog, while impressive, has inherent limitations that can be addressed through ongoing development and research. Understanding these limitations is crucial for realizing the full potential of this technology and shaping future advances in robotic quadruped development. Improvements to the robot’s design, software, and functionality will be key to creating a more sophisticated and versatile robotic companion.

Current Limitations in Perception and Navigation

The robot’s ability to perceive and navigate its environment is reliant on the accuracy and robustness of its sensors. Limited sensor accuracy, for example, in complex environments with occlusions or low-light conditions, may lead to errors in object recognition or path planning. The robot’s current processing capabilities may struggle with real-time, complex tasks involving dynamic environments. Real-world environments can introduce noise and inconsistencies, requiring more sophisticated algorithms for accurate and reliable performance.

Constraints in Dexterity and Manipulation

The current design of the robotic dog lacks the fine motor skills and dexterity necessary for tasks beyond basic locomotion. For example, complex manipulations or interactions with objects might be challenging or impossible. The robot’s paws, while designed for locomotion, may not be optimally suited for grasping or manipulating objects. Future improvements might involve incorporating more advanced actuators and sensors for increased dexterity.

Limitations in AI Capabilities

The robot’s AI capabilities are tied to the available datasets and algorithms. Its ability to adapt to unexpected situations or learn from novel experiences is constrained by its current programming, and the AI model might not always generalize well across different scenarios. For instance, the robot might recognize one ball but struggle with a ball of a slightly different shape or color.

This limits the robot’s ability to respond in a truly intuitive and adaptable manner.

Future Features for Enhanced Capabilities

- Enhanced Scene Understanding: The robot could benefit from a more advanced computer vision system that identifies and categorizes objects more accurately. This might involve more sophisticated algorithms or fusing multiple sensor inputs, and could include better discrimination of objects by size, shape, and texture.

- Improved Dexterity and Manipulation: Future designs could incorporate more advanced actuators and sensors to enhance the robot’s ability to interact with objects in a more precise and controlled manner. This might involve the use of more advanced grippers, improved joint control, and enhanced feedback systems. This would allow the robot to manipulate objects in more sophisticated ways, such as picking up small objects or performing more precise tasks.

- Advanced AI Learning and Adaptation: Incorporating reinforcement learning or other machine learning techniques could enable the robot to learn from its experiences and adapt to new situations. This would allow the robot to develop more nuanced understanding of its environment and develop more sophisticated responses.

- Voice Recognition and Natural Language Processing Improvements: Enhancements to the voice recognition system could increase accuracy and allow the robot to understand more complex commands and questions. Further advancements in natural language processing could enable the robot to respond more naturally and engage in more sophisticated conversations.

Epilogue

In conclusion, this programmable robotic dog, with its sophisticated AI capabilities, voice command interface, and vision system, represents a significant advancement in robotic technology. Its adaptability and potential applications in education, research, and entertainment make it an engaging and rewarding companion. While potential limitations exist, the future potential of this technology is promising.

Frequently Asked Questions

What are the typical applications for this robotic dog?

This robotic dog can be utilized for educational purposes, scientific research, and entertainment. Its programmable nature allows for diverse applications, including tasks like home automation and security.

What programming languages are supported?

The robot uses ROS (Robot Operating System) libraries, so it can be programmed in Python (through the ROS2 client library `rclpy`) and in other languages that have ROS client libraries, such as C++.

What is the typical setup process for the robot?

The setup involves installing the necessary hardware components, followed by software installation and configuration. Detailed instructions are provided in the user manual.

What are the limitations of this robotic dog?

Current limitations may include processing speed, the complexity of some programming tasks, and the need for further refinement in certain functionalities. Continuous development and improvements are expected.

What are the expected future features for this robotic dog?

Future enhancements might include improved navigation capabilities, more advanced object recognition, and enhanced interaction with complex environments.